RAG Node

Overview

The RAG (Retrieval-Augmented Generation) Node is a sophisticated AI component that combines information retrieval with text generation. It first retrieves relevant information from a knowledge base or vector database, then uses that context to generate more accurate and informed responses.

Node Type Information

| Type | Description | Status |
|---|---|---|
| Batch Trigger | Starts the flow on a schedule or batch event. Ideal for periodic data processing. | ❌ False |
| Event Trigger | Starts the flow based on external events (e.g., webhook, user interaction). | ❌ False |
| Action | Executes a task or logic as part of the flow (e.g., API call, transformation). | ✅ True |

This node is an Action node that retrieves relevant information and generates contextually enhanced responses using AI models.

The RAG Node generates more relevant responses from a Large Language Model (LLM) by using Retrieval-Augmented Generation (RAG). This approach incorporates additional context retrieved from a vector database to improve the accuracy and relevance of the generated text.

The node allows users to configure parameters such as the embedding model, generative model, and query specifics to tailor the response generation process to their needs. By leveraging RAG, users can efficiently create context-aware, concise, and helpful responses, making it a valuable tool for projects requiring advanced AI-driven text generation.
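The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the node's implementation: the bag-of-words embed function stands in for a real embedding model, the in-memory document list stands in for the vector database, and all function names are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], limit: int = 3) -> list[str]:
    """Return the `limit` documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:limit]


def build_prompt(system_prompt: str, query: str, context: list[str]) -> str:
    """Assemble the text that would be sent to the generative model."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"{system_prompt}\n\nContext:\n{joined}\n\nQuestion: {query}"


docs = [
    "Bali is an island in Indonesia known for its temples and beaches.",
    "Mistral publishes embedding and generative models.",
    "Vector databases store embeddings for similarity search.",
]
top = retrieve("Tell me something about Bali", docs, limit=1)
prompt = build_prompt("You are Travel Planner", "Tell me something about Bali", top)
```

The generative model only sees the assembled prompt, so the quality of the answer depends on which documents the retrieval step selects.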

Features

Key Functionalities

- Database Integration: Allows integration with a specified database (e.g., IndexCrawler) to fetch relevant data for downstream processes.
- Dynamic Query Handling: Supports dynamic query generation using variables (e.g., {{triggerNode.output.topic}}) for flexible search and data retrieval.
- Embedding and Generative Models: Enables the use of embedding models (e.g., mistral-embed) and generative models (e.g., mistral-large-2402) for advanced AI-powered search and content generation tasks.
- API Modular Configuration: Offers seamless customization with additional properties for tailored AI and search flow.
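To make dynamic query handling concrete, here is a minimal sketch of how a double-brace variable such as the one in the example could be resolved against the outputs of earlier nodes. The resolve function and the shape of flow_context are assumptions made for illustration; the platform's actual templating engine is not described in this document.

```python
import re

# Matches {{ dotted.path }} placeholders, allowing optional inner whitespace.
PLACEHOLDER = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def resolve(template: str, context: dict) -> str:
    """Replace each placeholder with the value found at its dotted path."""

    def lookup(match: re.Match) -> str:
        value = context
        for key in match.group(1).split("."):
            value = value[key]  # raises KeyError if the path does not exist
        return str(value)

    return PLACEHOLDER.sub(lookup, template)


# Hypothetical outputs of earlier nodes in the flow.
flow_context = {"triggerNode": {"output": {"topic": "Bali"}}}

query = resolve("{{triggerNode.output.topic}}", flow_context)
sentence = resolve("Tell me something about {{ triggerNode.output.topic }}", flow_context)
```

Failing loudly on a missing path is a deliberate choice in this sketch: an unresolved variable would otherwise be sent to the vector database as a literal search string.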

Benefits

- Flexibility: Supports a variety of databases and AI models, making it adaptable for diverse use cases.
- Efficiency: Utilizes embedding and generative models to provide relevant, high-quality outputs.
- Scalability: Ensures the capability to handle large datasets and complex queries without performance issues.
- User-Friendly Design: Simplifies workflow creation and configuration through an intuitive interface.

What can I build?

- Develop AI-driven chatbots that provide context-aware responses using RAG for improved user interactions.

- Create automated customer support systems that deliver precise answers based on user queries and contextual data retrieval.

- Build educational tools that generate tailored learning content and explanations by leveraging context from a vector database.

- Design content creation platforms that use RAG to produce more relevant and concise articles or reports based on user input.

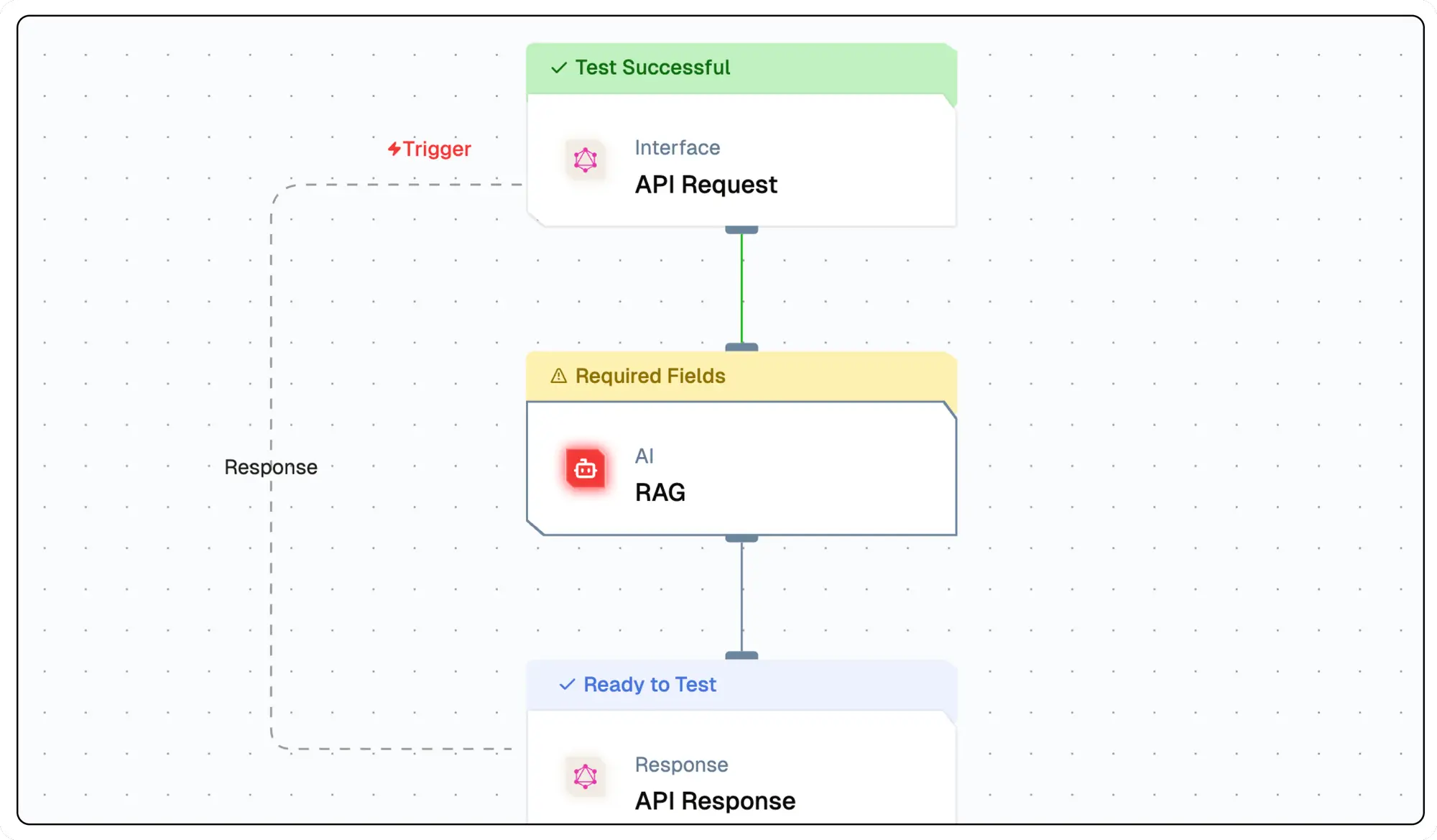

Setup

- Select the RAG Node.
- Fill in the required parameters.
- Build the desired flow.
- Deploy the project.
- Click Setup on the workflow editor to get the automatically generated instructions and add them to your application.

Configuration Reference

| Parameter | Description | Example Value |
|---|---|---|
| Database | Select the vector database to be queried. | Database |
| Search Query | Input the query to search the vector database. | Tell me something about Bali |
| Embedding Model Name | Select the embedding model used to embed the query into vector space. Available embedding models are loaded through the listModels method. | Embedding Model Name |
| Generative Model Name | Select the model to generate responses from the query results. | LLM Model |
| System Prompt | System prompt to guide the LLM. | You are Travel Planner |
| No. of References | Number of results to return. | 3 |
| Certainty | The distance score normalized into a value where 0 <= certainty <= 1; 1 represents identical vectors and 0 represents opposite vectors. | 0.7 |
| Filters | Apply JSON-based filters to refine search results. | |
| Messages (History) | Pass your message history here. | [] |
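The Certainty and No. of References parameters are easier to reason about with numbers. The sketch below assumes cosine similarity and the common normalization certainty = (1 + cosine) / 2, which matches the description of 1 for identical and 0 for opposite vectors; the formula the node actually uses is not stated here. Treating Certainty as a minimum threshold in select_references is likewise an assumption, and all function names are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two non-zero vectors, in the range [-1, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def certainty_from_cosine(similarity: float) -> float:
    """Map cosine similarity from [-1, 1] onto [0, 1] (assumed normalization)."""
    return (1.0 + similarity) / 2.0


def select_references(
    results: list[dict], certainty: float = 0.7, limit: int = 3
) -> list[dict]:
    """Keep results at or above the threshold, best first, capped at `limit`."""
    kept = [r for r in results if r["certainty"] >= certainty]
    kept.sort(key=lambda r: r["certainty"], reverse=True)
    return kept[:limit]


identical = certainty_from_cosine(cosine_similarity([1.0, 0.0], [1.0, 0.0]))
opposite = certainty_from_cosine(cosine_similarity([1.0, 0.0], [-1.0, 0.0]))

results = [
    {"data": "a", "certainty": 0.87},
    {"data": "b", "certainty": 0.40},
    {"data": "c", "certainty": 0.86},
]
selected = select_references(results, certainty=0.7, limit=3)
```

Under these assumptions, raising Certainty trades recall for precision, while No. of References caps how much retrieved context reaches the prompt.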

Low-Code Example

nodes:
  - nodeId: RAGNode_666
    nodeType: RAGNode
    nodeName: RAG
    values:
      systemPrompt: >-
        You are a helpful AI assistant that answers user queries based on the
        context provided to you.
      vectorDB: IndexCrawler
      queryField: "{{triggerNode.output.topic}}"
      limit: "3"
      certainty: "0.7"
      filters: |-
        {
          "operator": "And",
          "operands": [
            {
              "path": [
                "title"
              ],
              "operator": "Equal",
              "valueText": "Test"
            }
          ]
        }
      messages: "[]"
      embeddingModelName:
        provider_name: mistral
        type: embedder/text
        credential_name: Mistral API
        credentialId: 32bf5e3b-a8fc-4697-b95a-b1af3dcf7498
        model_name: mistral/mistral-embed
      generativeModelName:
        provider_name: mistral
        type: generator/text
        credential_name: Mistral API
        credentialId: 32bf5e3b-a8fc-4697-b95a-b1af3dcf7498
        model_name: mistral/mistral-large-2402
    needs:
      - triggerNodeOutput
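In the example, filters is a string containing JSON rather than a nested YAML mapping. A small sketch of producing that string programmatically follows; the helper names equal_text and all_of are hypothetical, and only the operator, operands, path, and valueText keys shown in the example are used.

```python
import json


def equal_text(path: list[str], value: str) -> dict:
    """Build a single condition matching a text property exactly."""
    return {"path": list(path), "operator": "Equal", "valueText": value}


def all_of(*conditions: dict) -> dict:
    """Combine conditions so that every one of them must match."""
    return {"operator": "And", "operands": list(conditions)}


# Serialize to the JSON string that would be placed in the filters field.
filters = json.dumps(all_of(equal_text(["title"], "Test")), indent=2)
```

Building the filter as a dictionary and serializing it once avoids hand-written JSON with mismatched brackets or quotes.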

Output

modelResponse

- The AI-generated text response based on the retrieved information and reasoning.

references

- A list of source documents or data points used to generate the response.
  - _additional: Contains extra metadata about the reference.
    - certainty: A confidence score (float) indicating how relevant the reference is.
  - data: The extracted content from the reference (can be a string or null).
  - rr: An additional attribute or identifier, which can be null.
  - certainty (duplicate at the top level): Same as the one inside _additional.

_meta

- Metadata about the model's processing of the request.
  - prompt_tokens: Number of tokens in the input prompt.
  - completion_tokens: Number of tokens in the AI's response.
  - total_tokens: Sum of prompt_tokens and completion_tokens.
  - prompt_tokens_details: Breakdown of token usage in the prompt.
    - cached_tokens: Tokens reused from cache.
    - audio_tokens: Tokens from audio input (if applicable).
  - completion_tokens_details: Breakdown of token usage in the generated output.
    - reasoning_tokens: Tokens used for reasoning.
    - audio_tokens: Tokens from audio output (if applicable).
    - accepted_prediction_tokens: Tokens from accepted predictions.
    - rejected_prediction_tokens: Tokens from rejected predictions.
  - model_name: Name of the AI model used.
  - model_provider: The provider or organization supplying the model.
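A downstream consumer might read these fields as in the sketch below. The summarize_output function is hypothetical and the sample dictionary is hand-written for illustration; the sketch only relies on the field names described above and on data being allowed to be null.

```python
def summarize_output(output: dict) -> dict:
    """Extract the answer, usable references, and a token sanity check."""
    meta = output["_meta"]
    # References whose data is null carry no text to show or cite.
    usable = [ref for ref in output["references"] if ref.get("data") is not None]
    return {
        "answer": output["modelResponse"],
        "usable_references": usable,
        "token_count_consistent": (
            meta["total_tokens"]
            == meta["prompt_tokens"] + meta["completion_tokens"]
        ),
    }


sample = {
    "modelResponse": "Response",
    "references": [
        {"_additional": {"certainty": 0.87}, "data": None, "rr": "wr", "certainty": 0.87},
        {"_additional": {"certainty": 0.86}, "data": "info", "rr": None, "certainty": 0.86},
    ],
    "_meta": {"prompt_tokens": 112, "completion_tokens": 111, "total_tokens": 223},
}
summary = summarize_output(sample)
```

Filtering out references with null data before displaying sources keeps empty citations out of the user-facing answer.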

Example Output

{
  "modelResponse": "Response",
  "references": [
    {
      "_additional": {
        "certainty": 0.8730262517929077
      },
      "data": null,
      "rr": "wr",
      "certainty": 0.8730262517929077
    },
    {
      "_additional": {
        "certainty": 0.8614917993545532
      },
      "data": "info",
      "rr": null,
      "certainty": 0.8614917993545532
    }
  ],
  "_meta": {
    "prompt_tokens": 112,
    "completion_tokens": 111,
    "total_tokens": 223,
    "prompt_tokens_details": {
      "cached_tokens": 0,
      "audio_tokens": 0
    },
    "completion_tokens_details": {
      "reasoning_tokens": 0,
      "audio_tokens": 0,
      "accepted_prediction_tokens": 0,
      "rejected_prediction_tokens": 0
    },
    "model_name": "gpt-4-turbo",
    "model_provider": "openai"
  }
}

Troubleshooting

Common Issues

| Problem | Solution |
|---|---|
| Invalid API Key | Ensure the API key is correct and has not expired. |
| Dynamic Content Not Loaded | Increase the Wait for Page Load time in the configuration. |

Debugging

- Check Lamatic Flow logs for error details.

- Verify API Key.